The Good, the Bad and the Ugly for Insurance Innovation

“You are not an individual. You are a data cluster.”

These were the ominous words that led a 2014 Ubisoft marketing campaign for a game about hackers, and about how vulnerable the personal data that makes up your digital footprint can be to anyone with the right skills. At the time, I found this a provocative, thought-provoking and downright existential statement, especially as someone working in marketing, an industry that makes direct use of personal data to target relevant advertising and messaging. While the campaign focused on data being stolen by hackers, I'm struck by how many of its talking points also apply to how businesses, and particular industries, could use personal data.

The general trend, as I've written about with regard to the upcoming GDPR and PSD2 data regulations, is towards top-down rules governing how companies use (and misuse) personal information without the explicit consent of the individual. The wider (and more philosophical) debate, and I love a good one of those, relates to the quote I opened with: can something as abstract, multifaceted and complex as a human being, with everything that makes up their personality, habits and interests, even in theory be accurately broken down into a data set?

What I'm not going to do is go anywhere near that debate. Instead, I'm going to talk about a really interesting related trend (the "Quantified Self") and how it relates to increasing investment in AI and associated tech in the insurance sector.

So what is the Quantified Self? Also known as "life-logging", it describes people actively and deliberately generating the kinds of data that expand their digital shadow, whether through fitness trackers, DNA testing, location geo-tagging or any number of other recent trends. Many even share this data openly with the world. Here is just a sample of what can be tracked:

– Your diet

– The quality of your sleep

– Your driving habits

– Your level of physical activity

– Your body fat percentage

– The speed at which you eat

– The quality and consistency of your posture

– Your blood chemistry

– Your mood

– Your sex life (yep!)

– How long you spend using your computer or other devices…

All of this (and much more) can now be monitored by various apps and platforms for anyone who seeks them out, and we're still only in the very early stages of what is possible. At the same time, businesses are investing more and more in technologies that can make direct use of this kind of data. I'm going to look at insurance in particular, as this kind of personal data generation has potentially huge implications for the sector.

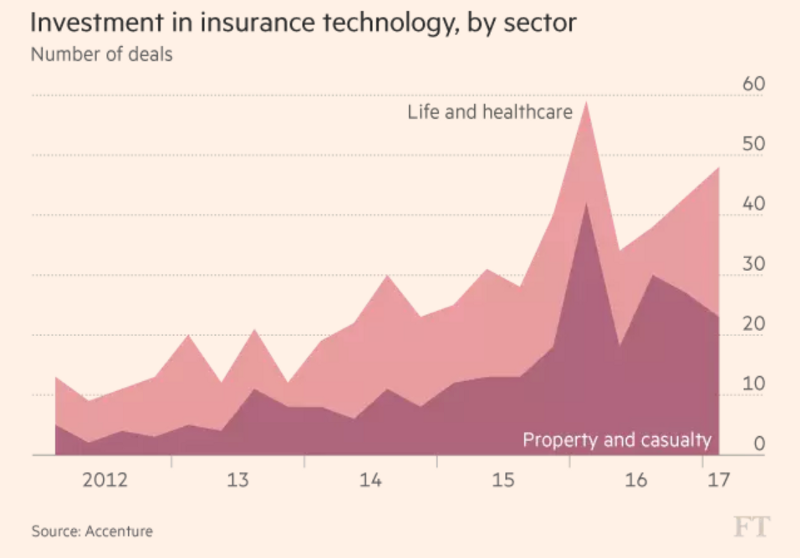

(image from https://www.ft.com/content/e07cee0c-3949-11e7-821a-6027b8a20f23)

The Good 🙂

There are good reasons why some people have embraced life-logging. While some will find seeing their life represented in numbers dehumanising, others will see accountability. When you're trying to lose weight, build healthier habits or measure any other kind of "performance", being able to assess more accurately than by gut feel whether you're achieving your goals is a powerful step towards making progress. Having your exercise broken down into data even allows you to gamify it and make it more fun.

Similarly, many argue that treating healthcare as proactive prevention as much as a cure after illness strikes is a healthy perspective to take. By that logic, any data that helps you take greater personal accountability for your health is a net positive. The key here is that the data is being used to help the individual achieve something. However, this places a lot of faith in the organisations that generate and store the data: in how private it is, and in how it could be used now or in the future.

The Bad 🙁

So what's the potential downside to having this personal data floating around? Much of the above raises ethical considerations that may not be obvious at first glance. Take health insurance, for example. While some people would welcome a cheaper premium in exchange for proving that they exercise regularly and engage in other healthy behaviours, many others would consider it highly invasive to be monitored in this way, whether the data is used in their favour or not.

The ethical minefields are everywhere. Easily accessible DNA analysis brings the potential discovery of predispositions to particular diseases. For many people, this will transform an experience they were perhaps using simply to learn about their ancestry. It would be unethical to keep this information from the individual, but it also feels irresponsible not to offer anyone who receives it some help in understanding what it means for them. It also raises the question of whether such a predisposition would count as a "pre-existing condition" that individuals need to disclose when taking out a health insurance policy.

The Downright Ugly :-/

The idea that people should be selective about what they share on public forums such as social media is already well established, given the impression potential employers might form of them. But what if this extended to the organisation that holds their life insurance policy? What if those pub selfies, trendy bar check-ins and "food porn" photos could be used as more accurate health indicators than traditional methods? That feels closer to a Black Mirror-style dystopia than to the behaviour of a brand that wants a trusting relationship with its customers.

Some people may read this article and think we're years away from any of this becoming reality, but there's already a startup in North Carolina working on calculating insurance premiums from a selfie. By analysing an image for signs such as how quickly you're ageing, your body mass index and indications of smoking, the company believes it can accurately generate personalised insurance quotes.

What’s Next?

This is clearly a debate with a lot of nuance. Insurance as an industry has always been based on the statistical analysis of risk, but I'm sure many will find it uncomfortable how quickly technology is making it possible to discriminate on a highly personalised level.

Everything I've discussed in this article will soon be (or already is) possible, and insurance firms have big decisions to make. On the one hand, they risk falling behind in a technology arms race, as AI and other tech offer the kinds of efficiencies and cost savings already being seen across other areas of Financial Services. On the other hand, they risk alienating customers if they overstep the (potentially uncertain) mark in how they build products and tools that use data to deliver these savings.

I certainly don't want to give the impression that I think insurance tech is a no-go; there's some great work being done. To give just a few examples, Lemonade in the US is offering a quick and easy new way to buy home insurance through an AI-powered chatbot.

Similarly, Trov is taking a fresh approach to insurance, providing instant, on-demand coverage for key belongings. As Scott Walchek, Trov's founder and CEO, explains it, the startup aims to make insurance "as simple as Tinder and as beautiful as Airbnb." The delightfully British-sounding startup Cuvva provides short-term vehicle coverage for those times you simply want to borrow someone's car for a few hours. Cuvva also helps people who own vehicles but drive only limited miles, by scaling the cost of their insurance with their mileage. What the services I see working have in common is that they aren't invasive, and the upfront benefit of using them is immediately obvious to a potential user.

Takeaways:

All the apps, devices and platforms riding the wave of the Quantified Self prove that many people are willing to share their personal data and have it used, provided they can clearly see how they benefit and the data is used in a smart, relevant way. If a product can help someone achieve something, whether that's becoming healthier or saving money or time, then disclosing the data that enables it is largely seen as an acceptable compromise.

However, we're rapidly reaching the point where it's possible to build tools that offer far more accurate profiling and (highly) individual risk assessments that benefit insurance providers more than the individuals involved. Maybe it's my cynical side, but I can't help imagining that some organisations will go straight ahead and build exactly these kinds of tools. For that reason, this will be an important test of whether regulations such as GDPR are actually enforced with the rigour required to hold back this kind of development.

But with the right approach to product development, there is also an amazing opportunity in this sector without going down that route. Returning to the quote I opened this article with, AI and related tech offer insurance providers the chance to build products that truly serve their customers as individuals, rather than treating them as data clusters.

(Enjoyed reading this article? It was originally published on Kyan Insights.)