A few months back in a Bournemouth boardroom, a team stopped arguing about which creative to run and pulled up an AI heatmap. Within seconds, it predicted which version would hold attention. It was right. More importantly, it changed the way decisions were made in the room.

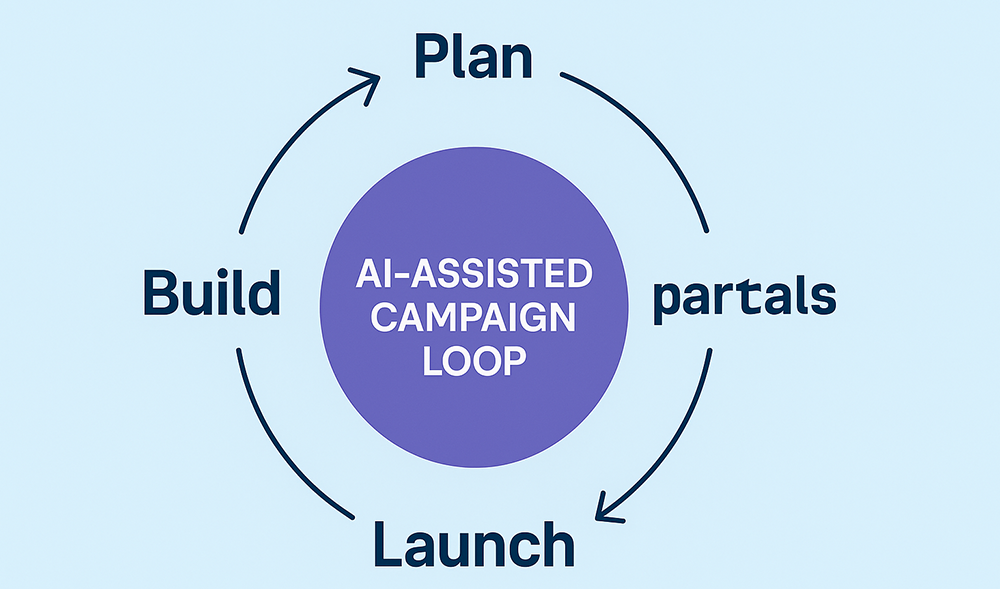

That’s the reality now. AI isn’t a futuristic add-on; it’s an integral part of the workflow, shaping real-time decisions. From Poole retailers clustering new customer segments to national brands spinning up ad copy in hours, the pace of campaigns has shifted. Planning cycles that used to take months are now compressed into days.

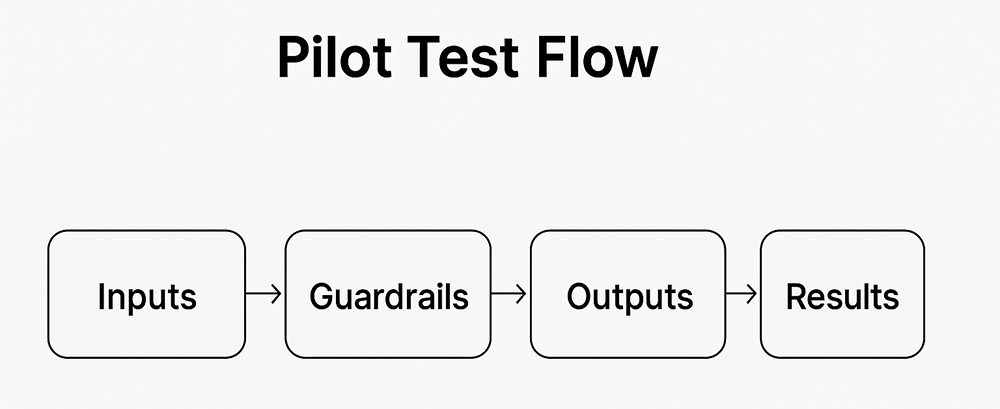

That speed is exciting. It means you can test more, learn faster, and move budgets with confidence. But it’s also risky. Move too quickly without strategy or guardrails, and you end up with expensive noise, not growth.

This playbook isn’t hype. It’s a practical guide to what’s actually changing, the myths to ignore, and the steps you can use to run AI pilots safely and profitably.

When people talk about “AI in marketing,” it’s usually sold as faster, smarter, and cheaper. The truth is more specific. Here’s what I see shifting most in my day-to-day work.

AI clustering surfaces groups planners often miss, sometimes entire micro-segments. That’s precision you can’t afford to ignore. The trade-off is opacity: you may not fully understand why the machine groups people together, and that’s where bias and compliance risks arise.

Campaign teams can now spin up dozens of variations in a single afternoon. That’s a huge time-saver. But quantity doesn’t equal quality. The creative debate hasn’t disappeared; it’s just changed. The question isn’t “what should we make?” but “which of these AI variants fits our brand and tone?”

AI can run “what-if” models in real time, suggesting daily budget shifts instead of quarterly ones. That’s agility, but agility without strategy is just chasing platform noise.

In measurement, the granularity is new: you can see which creative elements contribute, which audiences are saturating, and which levers move the dial. But attribution myths linger. You still need incrementality testing, holdouts, and human interpretation.

Myths to park now:

AI runs campaigns. It accelerates; it doesn’t replace judgment.

More variants = better results. Without good evaluation, you’re just burning money faster.

Attribution is solved. It isn’t. Pair AI with proper testing methods.

Data in, magic out. Consent and quality matter more than volume.

One vendor will do everything. Keep your stack modular and your exit routes open.

Talking about AI is easy. Using it in campaigns without losing your shirt is harder. These six steps are how I help teams go from pilot to practice.

Define what “good” means. A Poole retailer didn’t just say “use AI”; they set a clear goal: reduce cost per acquisition by 15% without damaging brand trust. That gave everyone a North Star and some red lines.

Watch out: If it isn’t written down, you’ll never know if you’ve won.
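If you want the goal to be truly unambiguous, you can go one step further and write it as a check. This is a minimal Python sketch, assuming CPA is simply spend divided by acquisitions and using the 15% target from the Poole example; the function names and figures are hypothetical. The brand-trust red line isn’t captured here and still needs a human sign-off.

```python
def cpa(spend: float, acquisitions: int) -> float:
    """Cost per acquisition: total spend divided by number of acquisitions."""
    if acquisitions <= 0:
        raise ValueError("acquisitions must be positive")
    return spend / acquisitions


def meets_cpa_goal(baseline_cpa: float, pilot_cpa: float,
                   target_reduction: float = 0.15) -> bool:
    """True if the pilot CPA is at least `target_reduction` below the baseline."""
    return pilot_cpa <= baseline_cpa * (1 - target_reduction)


# Hypothetical figures, for illustration only.
baseline = cpa(spend=10_000, acquisitions=400)  # 25.0
pilot = cpa(spend=10_000, acquisitions=480)     # about 20.83
print(meets_cpa_goal(baseline, pilot))          # True
```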

AI magnifies whatever you feed it. A travel brand swore their lists were solid. Within minutes, we found duplicates, expired consents, and “unknown source” tags – garbage in, garbage out, only faster.

Watch out: Don’t skip the audit. Clean, consented data is the foundation.
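An audit doesn’t need fancy tooling to get started. Here is a rough Python sketch of the three checks from the travel brand story: duplicates, expired consents, and unknown sources. The `Contact` fields, the “unknown source” label, and the sample rows are all assumptions; your CRM export will look different.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Contact:
    # Hypothetical record shape; adapt to whatever your CRM exports.
    email: str
    consent_expires: Optional[date]  # None = no consent on record
    source: str


def audit(contacts: list[Contact], today: date) -> dict[str, list[Contact]]:
    """Put each contact in exactly one bucket, checking problems in order."""
    report: dict[str, list[Contact]] = {
        "duplicate": [], "expired_consent": [], "unknown_source": [], "clean": [],
    }
    seen: set[str] = set()
    for c in contacts:
        key = c.email.strip().lower()  # normalise so "A@x.com " matches "a@x.com"
        if key in seen:
            report["duplicate"].append(c)  # first copy is kept, later ones flagged
            continue
        seen.add(key)
        if c.consent_expires is None or c.consent_expires < today:
            report["expired_consent"].append(c)
        elif c.source.strip().lower() in ("", "unknown", "unknown source"):
            report["unknown_source"].append(c)
        else:
            report["clean"].append(c)
    return report


rows = [
    Contact("a@example.com", date(2030, 1, 1), "webform"),
    Contact("A@example.com ", date(2030, 1, 1), "webform"),
    Contact("b@example.com", date(2020, 1, 1), "event"),
    Contact("c@example.com", date(2030, 1, 1), "unknown source"),
]
print({k: len(v) for k, v in audit(rows, date(2025, 1, 1)).items()})
# {'duplicate': 1, 'expired_consent': 1, 'unknown_source': 1, 'clean': 1}
```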

Pick one or two high-impact use-cases and decide the mode:

Copilot: AI assists, humans approve (e.g. subject lines in finance).

Template: AI produces standardised outputs (e.g. on-brand visual prompts).

Automation: AI executes with guardrails (e.g. low-risk remarketing copy).

A Bournemouth start-up chose just one use-case: ad copy variants. Three weeks later, they had 3× more creative to test and a measurable lift.

Watch out: Five experiments at once = unreadable results.

Write a one-page plan: control/holdout, sample size, stopping rule. Add brand rules: tone, banned claims, human sign-off. A fashion brand did this by holding back 10% of its spend as a control. Two weeks later, the AI-assisted ads delivered an 18% lift in CTR over the control, and the result was credible because the plan was clean.

Watch out: No success criteria? Expect a shouting match later.
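For the sample-size line of the plan, a standard two-proportion power calculation gives a rough number of impressions per arm. This Python sketch assumes equal-sized arms, a two-sided test at 5% significance and 80% power, and a hypothetical 2% baseline CTR. A 10% holdout is a deliberately unequal split, which needs more total traffic than this suggests. The stopping rule it implies is simple: fix the sample size up front and read the result once, rather than peeking every day.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate impressions per arm to detect a relative CTR lift.

    Normal-approximation formula for comparing two proportions,
    assuming equal-sized arms and a two-sided test.
    """
    p_test = p_control * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_test * (1 - p_test)
    n = (z_alpha + z_beta) ** 2 * variance / (p_test - p_control) ** 2
    return math.ceil(n)


# Hypothetical plan inputs: 2% baseline CTR, looking for an 18% relative lift.
n = sample_size_per_arm(p_control=0.02, relative_lift=0.18)
print(f"Stop once each arm has at least {n:,} impressions.")
```

Smaller expected lifts need dramatically more traffic, because the required sample grows with the inverse square of the difference you want to detect.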

Internal tests are fine for practice, but real insight comes from live campaigns. One start-up ran a small geo-split, spending modestly across six regions, with one held back. The signal was clear: two AI-generated variants consistently won. But the real learning came when we checked against revenue, not just clicks.

Watch out: Dashboards look pretty; business metrics tell the truth.
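Checking against revenue can be as simple as measuring lift on more than one metric. The Python sketch below compares each test region’s change with the held-back region’s change over the same period: a basic pre/post ratio against a control, not a full statistical model. Region names and numbers are invented to show how a big click lift can shrink to a small revenue lift. With a single control region, this gives a point estimate, not a confidence interval.

```python
from statistics import mean


def geo_lift(test: dict[str, tuple[float, float]],
             control: tuple[float, float]) -> float:
    """Relative lift of the test regions over the held-back control region.

    Each tuple is (pre_period, test_period) for one metric. Dividing by the
    control's own change removes movement shared by all regions (e.g. seasonality).
    """
    test_growth = mean(post / pre for pre, post in test.values())
    control_pre, control_post = control
    return test_growth / (control_post / control_pre) - 1


# Hypothetical data: five test regions plus one held-back region.
test_clicks = {r: (1000.0, 1250.0) for r in "ABCDE"}
test_revenue = {r: (10_000.0, 10_600.0) for r in "ABCDE"}

print(f"Click lift:   {geo_lift(test_clicks, (1000.0, 1050.0)):.1%}")       # 19.0%
print(f"Revenue lift: {geo_lift(test_revenue, (10_000.0, 10_400.0)):.1%}")  # 1.9%
```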

Turn wins into process. Capture prompts, document workflows, and train the team. A Dorset SME did this with social ad copy. When their intern left, the playbook survived. Then scale in stages, adding monitoring and drift checks.

Watch out: Scaling a fragile win is how small victories become expensive failures.
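Drift checks can start very small. A minimal Python sketch, assuming you log a daily CTR for the scaled workflow and compare its 7-day average with the CTR the pilot achieved; the 20% tolerance and the window length are placeholder choices, not recommendations.

```python
def drift_alert(baseline_ctr: float, daily_ctrs: list[float],
                tolerance: float = 0.20, window: int = 7) -> bool:
    """Return True when the recent average CTR has slipped too far below baseline.

    baseline_ctr: CTR the pilot achieved (assumed known).
    daily_ctrs:   one CTR per day since scaling, oldest first.
    """
    if len(daily_ctrs) < window:
        return False  # too little data to judge yet
    recent = sum(daily_ctrs[-window:]) / window
    return recent < baseline_ctr * (1 - tolerance)


# Hypothetical week: the pilot CTR was 2.0%, the scaled workflow averages 1.5%.
if drift_alert(0.020, [0.016, 0.015, 0.015, 0.014, 0.015, 0.015, 0.015]):
    print("CTR has drifted: review prompts and creative before scaling further.")
```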

A mid-sized Dorset retailer was facing rising ad costs and creative fatigue. Their Facebook campaigns looked tired, conversions were slipping, and budgets were under pressure.

Instead of trying to “AI everything,” we set one objective: lift conversion while cutting CPA.

Setup: AI could suggest, but not decide. We created a small prompt kit to keep outputs on-brand.

Method: 6 regions, 1 held back as control; modest spend to limit risk.

Result: two AI-assisted creatives outperformed the human-written versions. CPA fell 12% across test regions.

Lesson: the metric mattered, but the bigger win was process. The team left with a reusable playbook: prompts, checks, and a rhythm they could repeat. One copywriter summed it up perfectly: “It’s like having a junior assistant throwing out ideas, half binned, a few are gold.”

This is where I see most teams stumble. Vendors are slick. They’ll show you a dashboard that makes every problem look solved. Six months later, the licence gathers dust because the team never integrated it.

Build when you need control (sensitive data, unique workflows). A financial services client in London built their own tools just to keep data behind the firewall.

Buy when speed matters. A Bournemouth start-up plugged into a paid creative tool and was testing new ads within days.

Whichever you choose, be ruthless:

– Where is data stored, and for how long?

– Can you export prompts and workflows if you leave?

– What brand controls does it give you?

– What’s the pricing model, and does it balloon at scale?

One Dorset client nearly signed a three-year deal until we discovered the vendor blocked data export. That’s not software; that’s a trap.

You won’t transform everything in three months, but you’ll know if you’re on track.

Faster cycles: briefs to live ads in days, not weeks.

Repeatable use-cases: one or two workflows you can trust.

Prompt library: reliable starting points for creative.

Evidence: at least one clean incrementality test to prove lift.

Guardrails: clear approvals and banned claims baked in.
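Banned claims are one guardrail that’s easy to bake in mechanically. A hedged Python sketch: it assumes a hypothetical banned-phrase list (a real one should come from your compliance team), blocks any copy that contains one, and sends everything else to a human for approval. Keyword matching only catches literal phrases, so it supplements sign-off rather than replacing it.

```python
import re

# Hypothetical banned claims; a real list should come from legal/compliance.
BANNED_CLAIMS = ["guaranteed", "risk-free", "best in the uk", "cure"]


def banned_hits(copy: str, banned: list[str] = BANNED_CLAIMS) -> list[str]:
    """Return each banned phrase found in the copy (whole words, case-insensitive)."""
    text = copy.lower()
    return [p for p in banned
            if re.search(r"\b" + re.escape(p) + r"\b", text)]


def route(copy: str) -> str:
    """Blocked copy never ships; everything else still needs human approval."""
    return "blocked" if banned_hits(copy) else "needs_approval"


print(route("Guaranteed results, risk-free!"))   # blocked
print(route("Secure checkout, fresh styles"))    # needs_approval
```

The whole-word match means “secure” does not trip the “cure” rule, which cuts down on false alarms in approval queues.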

At this stage, you’re not chasing novelty. You’re building confidence that experiments generate insight, that the team can use AI responsibly, and that scaling won’t break the system.

AI is an accelerant. It speeds things up, expands options, and sharpens the view. But it doesn’t replace campaign strategy; it reshapes it.

The winners won’t be the teams with the fanciest dashboards. They’ll be the ones who ask sharper questions, design smarter tests, and scale responsibly.

Start small. Prove value in one place. Capture the process. Then scale.

That’s the future of campaign strategy. Not hype, not fear, but structured progress. And the best time to start is now.

Paul Giles is Director of Digital Hype, a digital marketing agency based in Bournemouth, working with businesses across Dorset and the UK.